Alignment landscape, learned content

How might white-box methods fit into the Alignment Plan:

1. Model internal access during training and deployment

2. The promise of AI to empower

Within any research group working on ML models, the workforce can be decomposed into the following categories:

1. Data team (paying humans to generate data points)

2. Oversight team

3. Team that deploys SGD, with RLHF as the training algorithm

RLHF stands for Reinforcement Learning from Human Feedback. Baseline RLHF has two main problems: oversight and catastrophes. Current proposals that address these problems are:

1. Using AIs to help oversee (oversight)

2. Adversarial training (catastrophes)
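To make the oversight problem concrete, here is a minimal sketch of the pairwise preference loss commonly used to train an RLHF reward model. It assumes a Bradley-Terry formulation, which these notes do not specify, and the function name and scalar rewards are hypothetical stand-ins for a real model's outputs.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Negative log-likelihood that the human-preferred response wins.

    Assumes a Bradley-Terry model (an assumption, not from the notes):
    P(chosen beats rejected) = sigmoid(reward_chosen - reward_rejected).
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(margin)) equals log(1 + exp(-margin)).
    # Branch on the sign of the margin to avoid overflow in exp().
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# The loss is small when the reward model agrees with the human label
# and large when it disagrees.
agree = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
disagree = preference_loss(reward_chosen=0.0, reward_rejected=2.0)
```

The loss only ever sees which response the human preferred. If the human cannot tell which response is actually better, the reward model is trained toward the wrong answer just as confidently, which is one way to read the oversight problem.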

After reading Holden Karnofsky's post "How might we align transformative AI if it’s developed very soon?", we can conclude that the remaining problems for current ML models are:

1. Eliciting latent knowledge

2. Fakes are easier to detect than to produce. For ChatGPT at least, it is difficult to construct fake inputs that the model cannot tell apart from real ones, so the contest is between real AI inputs and fake AI inputs.

What is explicit chain-of-thought reasoning?

In chain-of-thought reasoning, a model reasons rationally and step by step, writing out its intermediate steps. GPT-3, for example, does neither. This is a problem even for narrow AIs.
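As a small illustration, the sketch below contrasts a direct prompt with one that asks for step-by-step reasoning. The helper names are hypothetical, and the trigger phrase is the one popularized in zero-shot chain-of-thought prompting; no particular model or API is assumed.

```python
def direct_prompt(question: str) -> str:
    """Ask for the answer only; any reasoning stays hidden inside the model."""
    return f"Q: {question}\nA:"


def chain_of_thought_prompt(question: str) -> str:
    """Ask the model to write out its intermediate steps before answering."""
    return f"Q: {question}\nA: Let's think step by step."


# Hypothetical example question, used only for illustration.
question = "A bat and a ball cost $1.10 in total. The bat costs $1.00 more than the ball. How much does the ball cost?"
print(direct_prompt(question))
print(chain_of_thought_prompt(question))
```

The second prompt does not make the model more honest by itself, but it puts the reasoning on the page where an overseer can inspect it.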

Large neural networks may become power-seeking when:

1. Fine-tuning LLMs with widely used RLHF goes awry

2. The AI is deceptive and faces few obstacles to preventing itself from being turned off

3. The system's goals require acquiring power or resources

What does this mean for technical alignment research?

1. Interpretability

2. Benchmarking

3. Process-based supervision (instead of implicit optimization)

4. Scalable oversight (supplying a reliable reward or training signal to AI systems that are more capable than their overseers)

5. Eliciting latent knowledge (how can we incentivize a NN to tell us all the facts it knows that are relevant to a decision?)

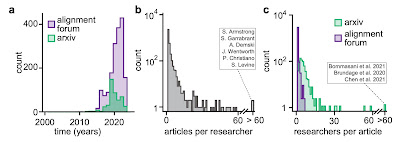

We need to look more carefully at scaling laws for compute, data, and model size, because the pace of progress has been shocking to professional forecasters. Metaculus gives a 25% chance of powerful AI (a system passing a multi-modal Turing test) within 9 years. Technical alignment research is still sprouting and preparadigmatic: there is no agreed strategy yet. The current alignment approach is the Swiss-cheese approach: combine fallible techniques and hope that their weaknesses cancel out.
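The arithmetic behind the Swiss-cheese idea can be sketched as follows. This is a toy model under stated assumptions, not a claim about real alignment techniques: the miss rates are made-up numbers, and both function names are hypothetical.

```python
from math import prod


def all_layers_miss(miss_rates):
    """Probability that a failure slips past every layer.

    ASSUMPTION: the layers miss independently of one another.
    """
    return prod(miss_rates)


def all_layers_miss_with_shared_blind_spot(miss_rates, shared):
    """Same quantity when the layers share a common blind spot.

    Toy model (an assumption): with probability `shared` the failure falls
    in a blind spot that every layer misses; otherwise the layers miss
    independently at the given rates.
    """
    return shared + (1 - shared) * prod(miss_rates)


# Three hypothetical techniques, each missing 20% of failures.
rates = [0.2, 0.2, 0.2]
independent = all_layers_miss(rates)
correlated = all_layers_miss_with_shared_blind_spot(rates, shared=0.05)
```

With independent layers the combined miss rate is about 0.008, far below any single layer's 0.2. With a 5% shared blind spot it rises to about 0.058, so the hope that weaknesses cancel out depends on the holes in the different techniques not lining up.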
