AGI Fundamentals - Week 3 - Goal misgeneralisation

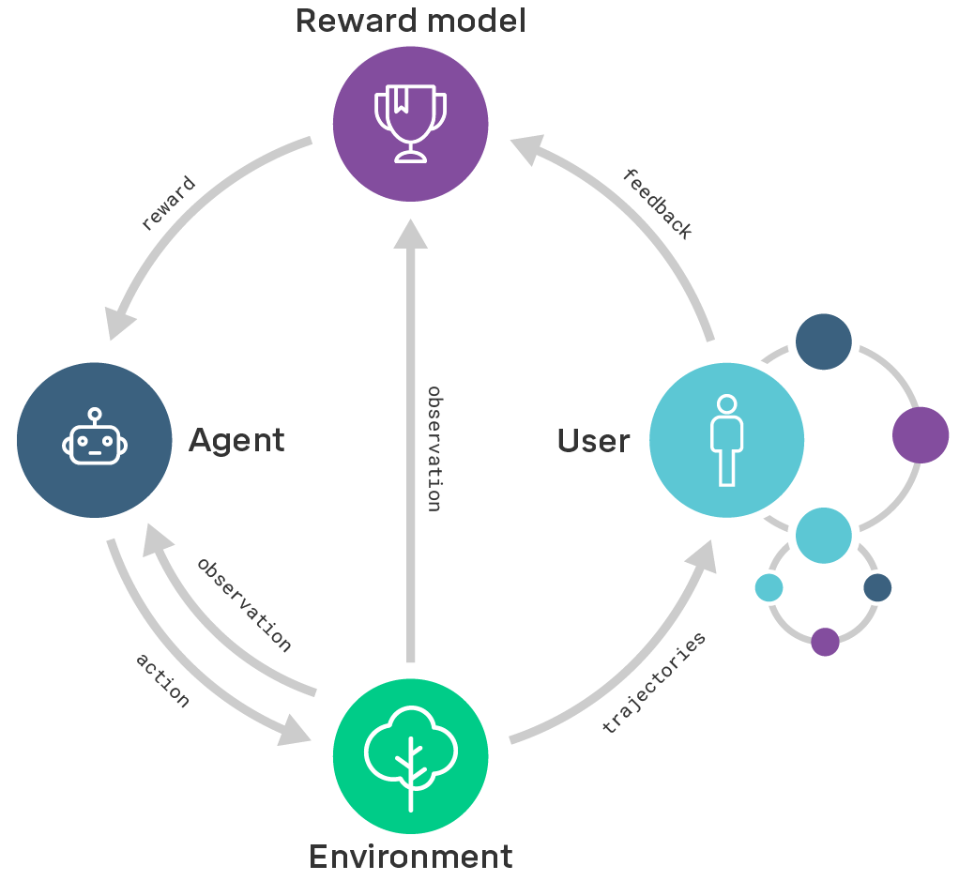

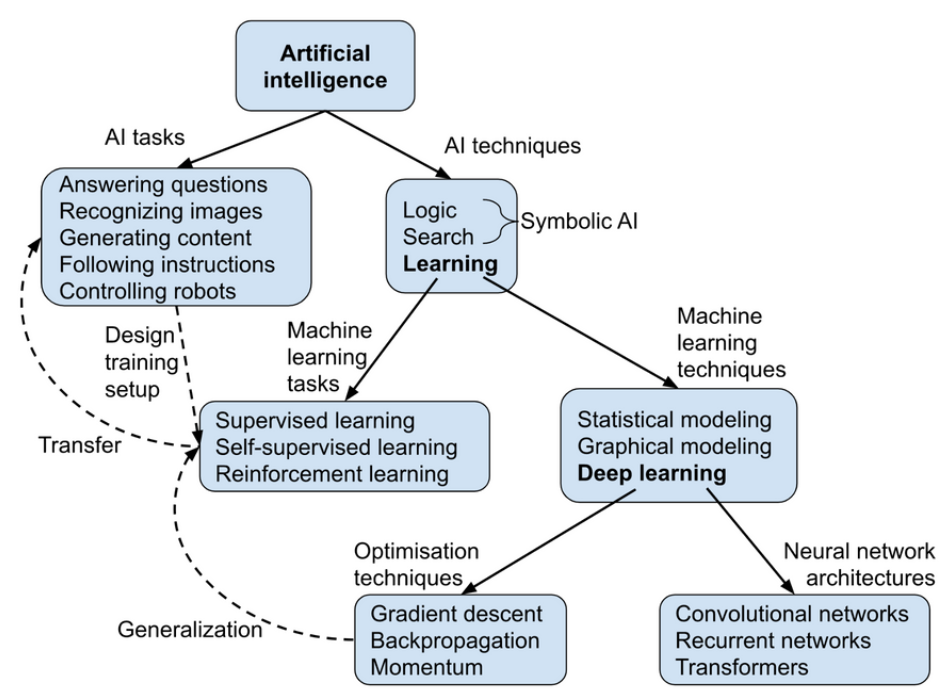

MiniTorch has been particularly helpful this week. I've been kept indoors by a sudden week-long downpour.

def goal misgeneralisation: when agents in new situations generalise to behaving in competent yet undesirable ways, because they learned the wrong goals during training.

Goal Misgeneralisation: Why Correct Specifications Aren't Enough For Correct Goals

A system typically arrives at goal misgeneralisation as follows:

1. A system is trained with a correct specification
2. The system only sees specification values on the training data
3. The system learns a policy
4. ... which is consistent with the specification on the training distribution
5. Under a distribution shift
6. ... the policy pursues an undesired goal

Some function f maps an input x, a member of the set of inputs X, to an output y, a member of the set of labels Y. In RL, X is the set of states or observation histories, and Y is the set of actions. A scoring function s will evaluate the performanc