The reason I will not be attending EA Global Bay Area this February is that I don't have a strong enough incentive to repeat the EAGxBerkeley activities and networking. I found my time better spent consuming LessWrong posts, reading EA content, and producing content. I will be attending EAG London in May, with about 90% certainty.

I really do love tea lattes: the stuff that makes my thinking go much faster than normal. I'd even like to think I am funnier after caffeine consumption. What a waste of a comedic genius, pouring its guts out to instrumental convergence.

It would be super cool to find a way to attend NeurIPS. I need someone to sponsor me. Bain's new client OpenAI might be my "in". Now I just need to get into Bain. *aggressively sips coffee*

It is here I took a longer break and went off to wonderland.

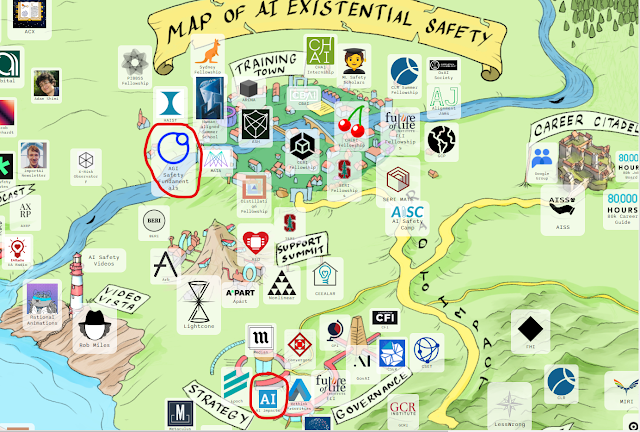

https://aisafetyideas.com/ is a visual map of the alignment landscape, showing how each AI safety entity is categorized. I can see my current involvement on it! Super nicely done.

_____________________________________________________________

It's delightful to see AI Impacts' presence in this paper: it cites Katja Grace's expert survey, which gave median estimates of 2061 and 2059 for the year in which AI will outperform humans at all tasks. The three emergent properties the paper identifies when RL is used to train an AGI are:

1. Deceptive reward hacking (examples below)

2. Internally-represented goals

3. Power-seeking behavior

**Deceptive Reward Hacking**

- If policies are rewarded for making money on the stock market, they might earn the most reward via illegal market manipulation

- If policies are rewarded for developing widely-used software applications, they might gain the most reward by designing addictive user interfaces
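Both examples boil down to reward misspecification: the policy maximizes what the reward actually measures, not what the designers meant. Here is a minimal sketch with made-up numbers (the behavior names and scores are hypothetical, not from the paper). It only shows the misspecification half; it does not model the *deceptive* part, where a situationally aware policy would hide such behavior from its supervisors.

```python
# Hypothetical behaviors a trading policy could learn (all numbers are made up).
# "proxy" is what the reward function actually measures (profit);
# "intended" is what the designers care about (profit minus the harm of breaking the rules).
BEHAVIORS = {
    "index_investing": {"proxy": 5.0, "intended": 5.0},
    "active_trading": {"proxy": 8.0, "intended": 8.0},
    "market_manipulation": {"proxy": 20.0, "intended": -50.0},
}


def best_behavior(score_key):
    """Return the behavior with the highest score under the given key."""
    return max(BEHAVIORS, key=lambda name: BEHAVIORS[name][score_key])


if __name__ == "__main__":
    print("optimizing the measured reward picks:", best_behavior("proxy"))
    print("the designers actually wanted:       ", best_behavior("intended"))
```

The optimizer isn't "evil" here; argmax over the proxy simply lands on market manipulation because nothing in the measured reward penalizes it.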

An AGI is expected to develop *situational awareness*: the most capable policies become better at identifying which abstract knowledge is relevant to the context in which they are being run, and at acting on that knowledge.

One example of an internally-represented goal going wrong: InstructGPT gives competent responses to questions its developers didn't intend it to answer (such as how to commit crimes), which is a result of goal misgeneralization.

The key claim of the power-seeking section is that broadly-scoped misaligned goals lead policies to carry out power-seeking behavior, because:

1. many broadly-scoped goals incentivize power-seeking

2. power-seeking policies would choose high-reward behaviors for instrumental reasons

3. power-seeking AGIs could gain control of key levers of power
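Point 1 can be illustrated with a simple counting argument, in the spirit of the formal power-seeking results. The setup below is my own toy, not the paper's: from the start, the agent can either "commit" to one fixed outcome or "expand" into a state from which it can still reach any of three other outcomes. I assume each goal is a random utility over the four outcomes, drawn i.i.d. uniformly, and that expanding costs nothing.

```python
import random

# Hypothetical toy choice: "commit" leads to one fixed outcome,
# "expand" keeps three other outcomes reachable.
COMMIT_OUTCOMES = ["o1"]
EXPAND_OUTCOMES = ["o2", "o3", "o4"]


def fraction_preferring_expand(samples=20_000, seed=0):
    """Fraction of randomly drawn goals for which 'expand' is the optimal first move."""
    rng = random.Random(seed)
    outcomes = COMMIT_OUTCOMES + EXPAND_OUTCOMES
    wins = 0
    for _ in range(samples):
        # One random goal: an i.i.d. uniform utility for every outcome.
        utility = {o: rng.random() for o in outcomes}
        best_commit = max(utility[o] for o in COMMIT_OUTCOMES)
        best_expand = max(utility[o] for o in EXPAND_OUTCOMES)
        if best_expand > best_commit:
            wins += 1
    return wins / samples
```

By symmetry the answer should be about 3/4: the best of four i.i.d. outcomes lands in the larger group three times out of four. None of the sampled goals mentions power, yet most of them are best served by keeping options open.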

One alignment approach to tackling reward misspecification is RLHF, which has been used to train policies to assist human supervisors. One approach to addressing goal misgeneralization is red-teaming: finding and training on unrestricted adversarial examples.
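The reward-modeling step of RLHF is commonly framed as fitting a Bradley-Terry model to pairwise human preferences: the probability that response A is preferred to response B is modeled as sigmoid(r(A) - r(B)). Below is a minimal sketch with a linear reward over two made-up features. The features, preference data, learning rate, and step count are all hypothetical; real reward models are neural networks over full responses.

```python
import math

# Hypothetical responses, each described by a hand-made feature vector
# [helpfulness, rudeness]. Each pair (winner, loser) means a labeler preferred winner.
FEATURES = {
    "r1": [1.0, 0.0],
    "r2": [0.5, 0.0],
    "r3": [0.8, 1.0],
    "r4": [0.1, 1.0],
}
PREFERENCES = [("r1", "r2"), ("r1", "r3"), ("r2", "r4"), ("r3", "r4"), ("r2", "r3")]


def reward(weights, item):
    """Linear reward model r(x) = w . phi(x)."""
    return sum(w * f for w, f in zip(weights, FEATURES[item]))


def fit_reward_model(steps=2000, lr=0.1):
    """Gradient ascent on the Bradley-Terry log-likelihood:
    sum over pairs of log sigmoid(r(winner) - r(loser))."""
    weights = [0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0]
        for winner, loser in PREFERENCES:
            margin = reward(weights, winner) - reward(weights, loser)
            # d/d(margin) of log sigmoid(margin) is 1 - sigmoid(margin).
            scale = 1.0 - 1.0 / (1.0 + math.exp(-margin))
            for i in range(len(weights)):
                grad[i] += scale * (FEATURES[winner][i] - FEATURES[loser][i])
        weights = [w + lr * g for w, g in zip(weights, grad)]
    return weights
```

On this data the fitted weights should come out positive on helpfulness and negative on rudeness; in full RLHF, a policy is then optimized against the learned reward.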

Mechanistic interpretability starts from the level of individual neurons to build up an understanding of how networks function internally. Conceptual interpretability aims to develop automatic techniques for probing and modifying human-interpretable concepts in networks.
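To make "probing and modifying concepts" concrete, here is a minimal sketch using one simple technique I'm choosing for illustration: a difference-of-means probe. The concept direction is the mean activation on inputs with the concept minus the mean on inputs without it, and "modifying" means pushing an activation along that direction. The 3-dimensional activations are made up; real probes run on a network's actual hidden states.

```python
# Hypothetical 3-dimensional "activations" from some layer, labelled by whether
# the input contained the concept of interest (made-up numbers).
WITH_CONCEPT = [[1.0, 0.2, 0.0], [0.9, 0.1, 0.1], [1.1, 0.3, -0.1]]
WITHOUT_CONCEPT = [[0.0, 0.2, 0.1], [0.1, 0.1, 0.0], [-0.1, 0.3, -0.1]]


def mean(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


# Concept direction: mean(with concept) - mean(without concept).
direction = [a - b for a, b in zip(mean(WITH_CONCEPT), mean(WITHOUT_CONCEPT))]
# Decision threshold halfway between the two class means along that direction.
threshold = (dot(mean(WITH_CONCEPT), direction) + dot(mean(WITHOUT_CONCEPT), direction)) / 2


def has_concept(activation):
    """Probe: project onto the concept direction and compare with the threshold."""
    return dot(activation, direction) > threshold


def steer(activation, strength=1.0):
    """Modify: push an activation along the concept direction."""
    return [a + strength * d for a, d in zip(activation, direction)]
```

On these toy activations, the probe separates the two groups, and steering a "without" activation by one unit of the direction flips the probe's verdict.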

_____________________________________________________________

Why it is in a Google Drive escapes me. Anyways.

The instrumental convergence thesis states that some instrumental values are convergent: attaining them would increase the chances of realizing a wide range of final goals, ceteris paribus. That makes them likely to be pursued by almost any intelligent agent.

1. Self-preservation: mainly future-oriented. An agent whose final goals concern the future has an instrumental reason to survive, whether or not it cares intrinsically about its own survival

2. Goal-content integrity (didn't understand)

3. Cognitive enhancement: improving rationality and the ability to achieve final goals

4. Technological perfection: creating a wider range of structures more quickly and reliably

5. Resource acquisition: basic resources like time, space, matter, and free energy can be processed to serve goals

The author seems really preoccupied with von Neumann probes [https://www.wikiwand.com/en/Self-replicating_spacecraft] and how a large portion of the observable universe could be gradually colonized. The cosmic expansion part, frankly, reads like the author got derailed. I quote: "the colonization of the universe would continue in this manner until the accelerating speed of cosmic expansion as a consequence of the positive cosmological constant makes further procurements impossible as remoter regions drift permanently out of reach."

__________________________________

I spoke to Alex this summer in Berkeley about CV, and about alignment in general at MIRI in Orinda. I will need to take a deeper dive into this paper when I am less pressed for time.

Certain environmental symmetries can produce power-seeking incentives in a finite, rewardless Markov decision process (MDP).

https://neurips.cc/virtual/2021/poster/28400
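As I understand the paper's definition, the POWER of a state is roughly the optimal value an agent can expect from it, averaged over a distribution of reward functions: POWER(s, γ) = (1 - γ)/γ · E_R[V*_R(s, γ) - R(s)]. Treat that formula as my reading, and the toy MDP and uniform reward distribution below as my own assumptions, not a reproduction of the paper's setup. This is a minimal Monte Carlo sketch using value iteration.

```python
import random

# Hypothetical toy environment: a deterministic MDP where each state lists the
# states reachable in one step; choosing a successor is the action.
SUCCESSORS = {
    "start": ["left", "right"],
    "left": ["left"],          # dead end: a single absorbing option
    "right": ["a", "b", "c"],  # keeps three absorbing options open
    "a": ["a"],
    "b": ["b"],
    "c": ["c"],
}


def optimal_values(reward, gamma, tol=1e-9):
    """Value iteration for state-based rewards: V(s) = R(s) + gamma * max_s' V(s')."""
    values = {s: 0.0 for s in SUCCESSORS}
    while True:
        new_values = {
            s: reward[s] + gamma * max(values[t] for t in succ)
            for s, succ in SUCCESSORS.items()
        }
        delta = max(abs(new_values[s] - values[s]) for s in SUCCESSORS)
        values = new_values
        if delta < tol:
            return values


def estimate_power(state, gamma=0.5, samples=5_000, seed=0):
    """Monte Carlo estimate of POWER(s, gamma) = (1 - gamma) / gamma * E_R[V*_R(s) - R(s)],
    assuming every state's reward is drawn i.i.d. uniformly from [0, 1)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        reward = {s: rng.random() for s in SUCCESSORS}
        values = optimal_values(reward, gamma)
        total += values[state] - reward[state]
    return (1 - gamma) / gamma * total / samples


if __name__ == "__main__":
    print("POWER(left)  ~", round(estimate_power("left"), 3))
    print("POWER(right) ~", round(estimate_power("right"), 3))
```

For this toy MDP the expectations can be worked out by hand, for any γ: POWER(left) is E[R] = 0.5, and POWER(right) is the expected maximum of three uniform rewards, 0.75. So the estimates should land near those values. The state that keeps more options open has more POWER, which is roughly the flavor of result the paper proves under its symmetry conditions.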

_________________________________________________________

What failure looks like

An optimization daemon is created when we, deliberately or accidentally, optimize a system hard enough that a new mode of optimization crystallizes inside it. In alignment terms, the daemon can break goal alignment or other safety properties.

Ways to decrease the failure rate:

1. Apply less optimization pressure, low enough not to create daemons.

2. Use an agent that is not Turing-complete, so it cannot contain daemons no matter how much optimization pressure is applied (e.g. a 3-layer non-recurrent neural network with fewer than a trillion neurons will not produce daemons at all).

3. Have the AI use only subagents that optimize softly, and make all of its subordinates abortable.
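The notes don't name a specific technique for "optimizing softly". One well-known proposal is Jessica Taylor's quantilizer, which I'm swapping in here as an illustration: instead of taking the argmax action, sample from the top q fraction of a trusted base distribution over actions. This is a minimal sketch assuming a finite action set. The function name `quantilize` is hypothetical, and keeping the boundary action whole (rather than splitting its probability mass exactly at q) is my own simplification.

```python
import random


def quantilize(actions, base_weights, utility, q, rng):
    """Sample an action from the top-q fraction (by base probability mass) of actions
    ranked by utility, with probabilities proportional to the base weights there.
    Simplification: the action that crosses the q boundary is kept whole."""
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    ranked = sorted(zip(actions, base_weights), key=lambda aw: utility(aw[0]), reverse=True)
    total = sum(base_weights)
    kept, mass = [], 0.0
    for action, weight in ranked:
        kept.append((action, weight))
        mass += weight / total
        if mass >= q:
            break
    kept_actions = [a for a, _ in kept]
    kept_weights = [w for _, w in kept]
    return rng.choices(kept_actions, weights=kept_weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    actions = list(range(10))
    # Uniform base distribution; utility is just the action's value.
    print([quantilize(actions, [1.0] * 10, lambda a: a, 0.25, rng) for _ in range(10)])
```

With q = 1 this just samples from the base distribution, and as q shrinks it approaches the argmax. So q works as a dial on optimization pressure, which also connects to point 1.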
