AGISF - Week 6 - Interpretability

I'm blown away by how cool distill.pub is, definitely worth revisiting this spring break.

What is interpretability?

Develop methods of training capable neural networks to produce human-interpretable checkpoints, such that we know what these networks are doing and where to exert interference.

Mechanistic interpretability is a subfield of interpretability which aims to understand networks on the level of individual neurons. After understanding neurons, we can identify how they construct increasingly complex representations, and develop a bottom-up understanding of how neural networks work.

Concept-based interpretability focuses on techniques for automatically probing (and potentially modifying) human-interpretable concepts stored in representations within neural networks.

Feature Visualization (2017) by Chris Olah, Alexander Mordvintsez and Ludwig Schubert

A big portion of feature visualization is answering questions about what a netowork---or parts of a network--- are looking for by generating examples. Feature visualization by optimization is, to my understanding, taking derivatives wrt to their inputs and find out what kind of input corresponds to a behavior.

// why should we visualize by optimization?

It separates the things causing behavior from things that merely correlate with the causes. Optimization isolates the causes of behavior from mere correlations. A neuron may not be detecting what uou initially thought.

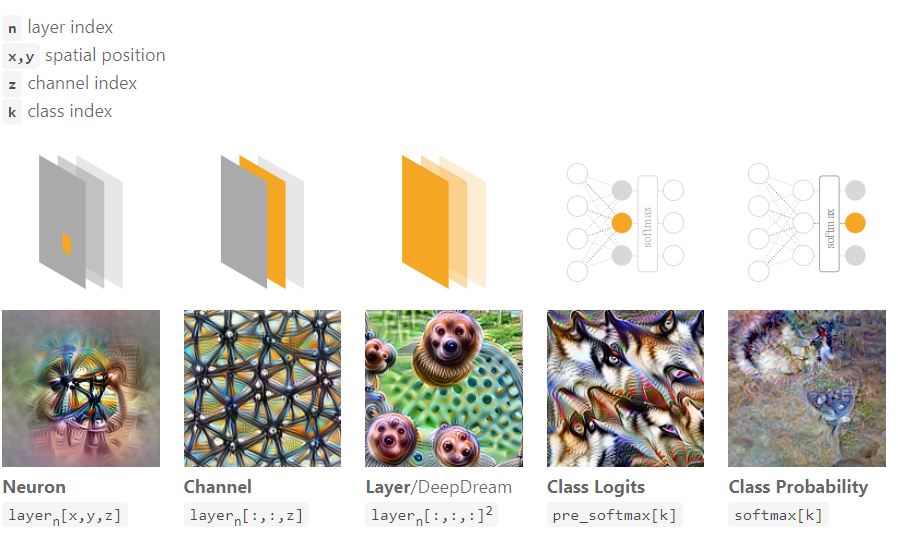

The hierarchy of Objective selection goes as follows:

1. Neuron (at an individual position)

2. Channel

3. Layer

4. Class Logits (before softmax)

see softmax

5. Class Probability (after softmax)

I didn't understand what interpolate meant, or interpolating in the latent space of generative models.

I think I got this part down pretty well--- regularization. Regularization is imposing structure by setting priors and constraints. There are three families of regularization:

1. Frequency penalization is penalizing nearby pixels that have too high of variance, or penalize blurring of the image after each iteration of the optimization step

2. Transformation robustness is tweaking the example slightly (rotate, add random noise, scale) and testing if the optimization target can still be activated--- hence, robustness

3. Learned Priors is jointly optimizing the gradient of probability and the probability of the class.

In conclusion, feature visualization is a set of techniques for developing a qualitative understanding of what different neurons within a network are doing.

Zoom In: An Introduction to Circuits by OpenAI

Science seems to be driven by zooming in such as zooming in to see cells and using X-ray crystallography to see DNA--- the works of interpretability can possibly be scrutinized.

Just as Schwann has three claims about cells--->

Claim 1: The cell is the unit of structure, physiology, and organization in living things.

Claim 2: The cell retains a dual existence as a distinct entity and a building block in the construction of organisms.

Claim 3: Cells form by free-cell formation, similar to the formation of crystals.

OpenAI offers three claims about NNs--->

Claim 1: Features are the fundamental unit of neural networks.

They correspond to directions. These features can be rigorously studied and understood.

Claim 2: Features are connected by weights, forming circuits. 2

These circuits can also be rigorously studied and understood.

Claim 3: Analogous features and circuits form across models and tasks.

Concepts backing up claim 1:

- substantial evidence saying that early layers contain features like edge or curve detectors, while later layers have features like floppy ear detectors or wheel detectors.

- curve detectors are capable of detecting circles, spirals, S-curves, hourglass, 3d curvature.

- high-low frequency detectors are capable of detecting boundaries of objects

- the pose-invariant dog head detector is an example of how the feature visualization is looking for and the dataset examples validate it

I've pretty much stared at Neuron 4b:409 all summer last year and it finally makes sense.

Concepts backing up claim 2:

- all neurons in our network are formed from linear combinations of neurons in the previous layer, followed by ReLU.

- Given an example of a 5x5 convolution, there should then be a 5x5 set of weights linking two neurons--- these weights can be +/-. The positive weight means if the earlier neuron fires in that position, it excites the late neuron. Negative weight inhibits.

- Superposition (car example) is where excitation for windows usually goes to the top and inhibition on the bottom, excitation for wheels on the bottom, and inhibition at the top.

- However, on a deeper level, the model was able to spread the car feature over a number of neurons that can also detect dogs. This is what it means to be a polysemantic neuron.

Concepts backing up claim 3:

- Universality, the third claim, says that analogous features and circuits form across models and tasks.

- Convergent learning is suggesting that different neural networks (for different features, for example) can develop highly correlated neurons

- Curve detectors is a low-level feature that seem to be common to vision model architectures (Alexnet, inceptionv1, vgg19, resnetv2- the 50 layer one)

Comments

Post a Comment