AGI Safety Fundamentals: Governance Week 1

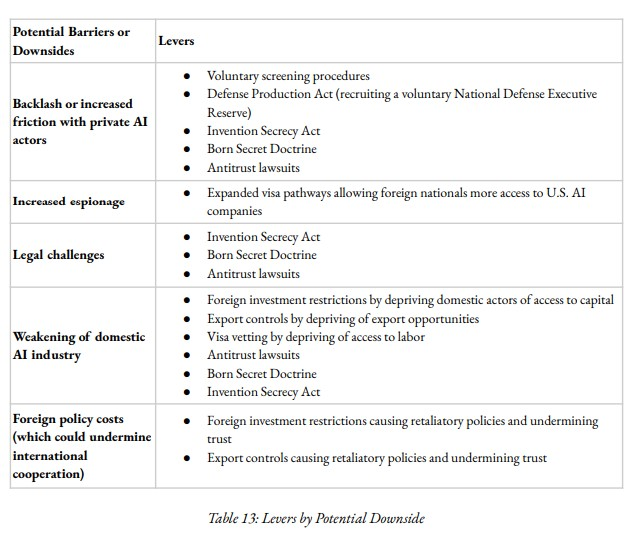

The AI governance landscape consists of a series of standards and regulations that address catastrophic risks, AI-assisted bioterrorism, and AI-exacerbated conflict. Some example approaches to addressing AI risk are model evaluations, hardware export controls, cautious coalition expansion, treaties with privacy-preserving verification mechanisms, lab governance, and a "CERN for AI".

I will be documenting my AI Safety Fundamentals: Governance course notes on a weekly basis, and I will also be posting them on LessWrong.

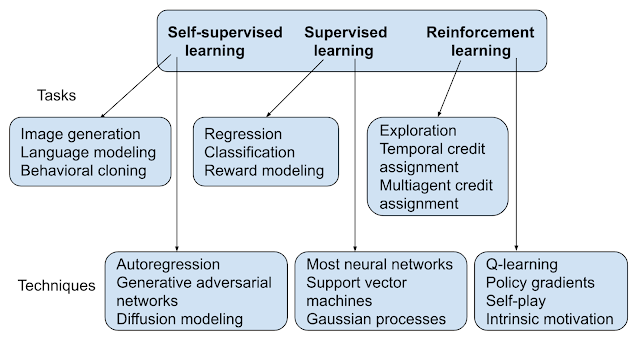

For all its complexity, modern AI can be summarized in one sentence: machine learning systems use computing power to execute algorithms that learn from data.
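To make that one-sentence summary concrete, here is a minimal sketch of my own (not from the course readings) in which each part of the sentence shows up as a separate piece of code. The toy rule `y = 2x + 1`, the function names, and the step counts are all assumptions chosen for illustration.

```python
# Data: inputs paired with their "right answers".
# Assumption: a toy rule y = 2x + 1, invented for this sketch.
data = [(float(x), 2.0 * x + 1.0) for x in range(-5, 6)]

def mse(w, b, examples):
    """Mean squared error of the line y = w*x + b on the examples."""
    return sum((w * x + b - y) ** 2 for x, y in examples) / len(examples)

def train(examples, steps, lr=0.01):
    """Algorithm: fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(steps):  # Compute: each extra step spends more arithmetic
        grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in examples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Same data, same algorithm, different compute budgets.
w_small, b_small = train(data, steps=10)
w_large, b_large = train(data, steps=1000)

print("10 steps:   error =", mse(w_small, b_small, data))
print("1000 steps: error =", mse(w_large, b_large, data))
```

With the data and algorithm held fixed, the run with more steps ends up much closer to the underlying rule, which is the sense in which compute is its own ingredient alongside data and algorithms.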

An example of supervised learning (SL)'s impact on national security is the US military's Project Maven, which created the Algorithmic Warfare Cross-Functional Team. It applied SL to photos and videos collected by US drones around the world, and used SL to predict equipment failures in special operations helicopters.

Common failure modes include segmentation and clustering inaccuracies, which can culminate in the generation of disinformation in a domain as weighty as modern geopolitics. CSET claims that "neither SL nor unsupervised learning (UL) excels at the long-term strategic assessment and planning integral to national security".

The most important property of the data in a supervised learning system is the accuracy of the right answers in the training data; when those answers are wrong or skewed, the result is training data bias. (See The Alignment Problem by Brian Christian.)

According to an OpenAI study, the amount of compute applied to the training of top AI projects increased by a factor of 300,000. We can attribute much of this growth to the parallelized computing of ML chips, a trend running from 2012 to the present.
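A factor of 300,000 is easier to grasp as a number of doublings. The sketch below is my own arithmetic, not code from the study: the function names are hypothetical, and the elapsed time passed to `doubling_time_months` is a placeholder I picked for illustration, since these notes do not record the exact period measured.

```python
import math

GROWTH_FACTOR = 300_000  # increase in training compute cited above

def doublings(factor):
    """How many times a quantity must double to grow by `factor`."""
    return math.log2(factor)

def doubling_time_months(factor, elapsed_months):
    """Average doubling time if `factor` growth took `elapsed_months`."""
    return elapsed_months / doublings(factor)

n = doublings(GROWTH_FACTOR)
print(f"{n:.1f} doublings")  # about 18.2

# Assumption: 72 months is an illustrative placeholder, not a figure from the notes.
ASSUMED_MONTHS = 72
print(f"{doubling_time_months(GROWTH_FACTOR, ASSUMED_MONTHS):.1f} months per doubling")
```

Swapping in the actual measurement window from the study gives the implied doubling time directly.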

The article emphasizes the AI triad as a way of framing and informing national security policy, and as a tool for developing intuitions. The comparative policy importance of each part has implications for national security strategy: when one part of the triad is a higher priority than the others, different policy prescriptions follow.

So if the research talent and resources available to develop algorithms fall below national demand, the policy levers are visa controls, industrial strategies, worker retraining, certification frameworks for AI skills, and educational investments to address shortages of AI faculty and teachers.

Next, a broad overview of AI development, visualized. An example that I liked: Richard Ngo points out that for AI to reach human-level performance at the board game Diplomacy (a game similar to Risk), it had to negotiate in natural language to form alliances and deceive other players when betraying those alliances.

DeepMind's AlphaCode system was trained to solve competitive programming problems, placing in the top 54% of participating humans. DeepMind's AlphaTensor discovered new algorithms for matrix multiplication that, for some matrix sizes, were faster than any designed by humans.

It seems imperative to me that more effort be put into alignment research, which is why EA urges wide public exposure to transformative technology.
