AGISF - Week 6 - Interpretability

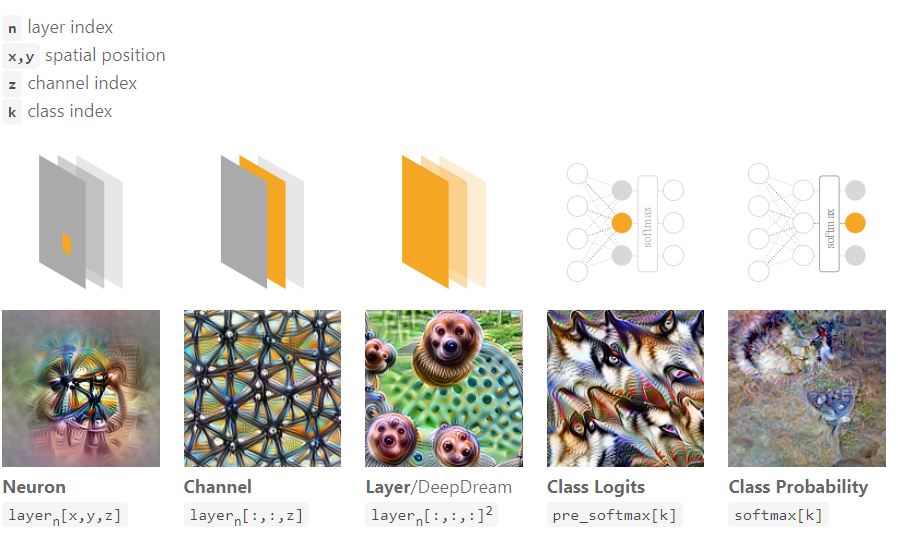

I'm blown away by how cool distill.pub is, definitely worth revisiting this spring break. What is interpretability? Develop methods of training capable neural networks to produce human-interpretable checkpoints, such that we know what these networks are doing and where to exert interference. Mechanistic interpretability is a subfield of interpretability which aims to understand networks on the level of individual neurons. After understanding neurons, we can identify how they construct increasingly complex representations, and develop a bottom-up understanding of how neural networks work. Concept-based interpretability focuses on techniques for automatically probing (and potentially modifying) human-interpretable concepts stored in representations within neural networks. Feature Visualization (2017) by Chris Olah, Alexander Mordvintsez and Ludwig Schubert A big portion of feature visualization is answering questions about what a netowork---or parts of a network--- are...